TTA for Image Classification

Enhancing image classifiers with test-time adaptation techniques

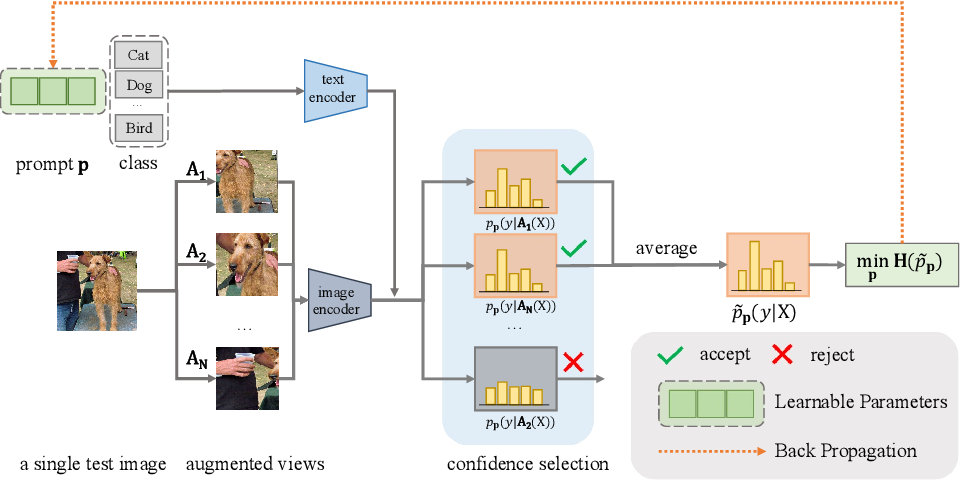

This project explores Test-Time Adaptation (TTA) methods for improving image classifier performance at inference time. Unlike traditional fine-tuning, which requires substantial compute and labeled data, TTA adjusts the model dynamically at test time, making it an efficient alternative for real-world applications.

Key Objectives

- Dynamic Model Adaptation: Enable real-time model improvements during inference without retraining.

- Innovative Augmentation Techniques: Evaluate and develop cutting-edge image augmentation strategies to improve generalization.

- Context-Aware Prompt Engineering: Refine zero-shot classification prompts to improve their alignment with input data.

- Comprehensive Evaluation: Benchmark the proposed methods on challenging out-of-distribution datasets such as ImageNet-A.

Background and Motivation

The Challenge of Fine-Tuning

Traditional fine-tuning approaches, while effective, often require:

- Extensive computational resources for retraining large-scale models like CLIP.

- Access to large labeled datasets, which is not feasible in many scenarios.

The TTA Advantage

TTA offers a lightweight and adaptable alternative, dynamically improving model performance during inference. This is particularly valuable for deploying models in resource-constrained environments or handling unseen data distributions.

Project Contributions

Task 1: Image Augmentation

Objective:

Enhance TTA performance through advanced image augmentation techniques.

Techniques Explored:

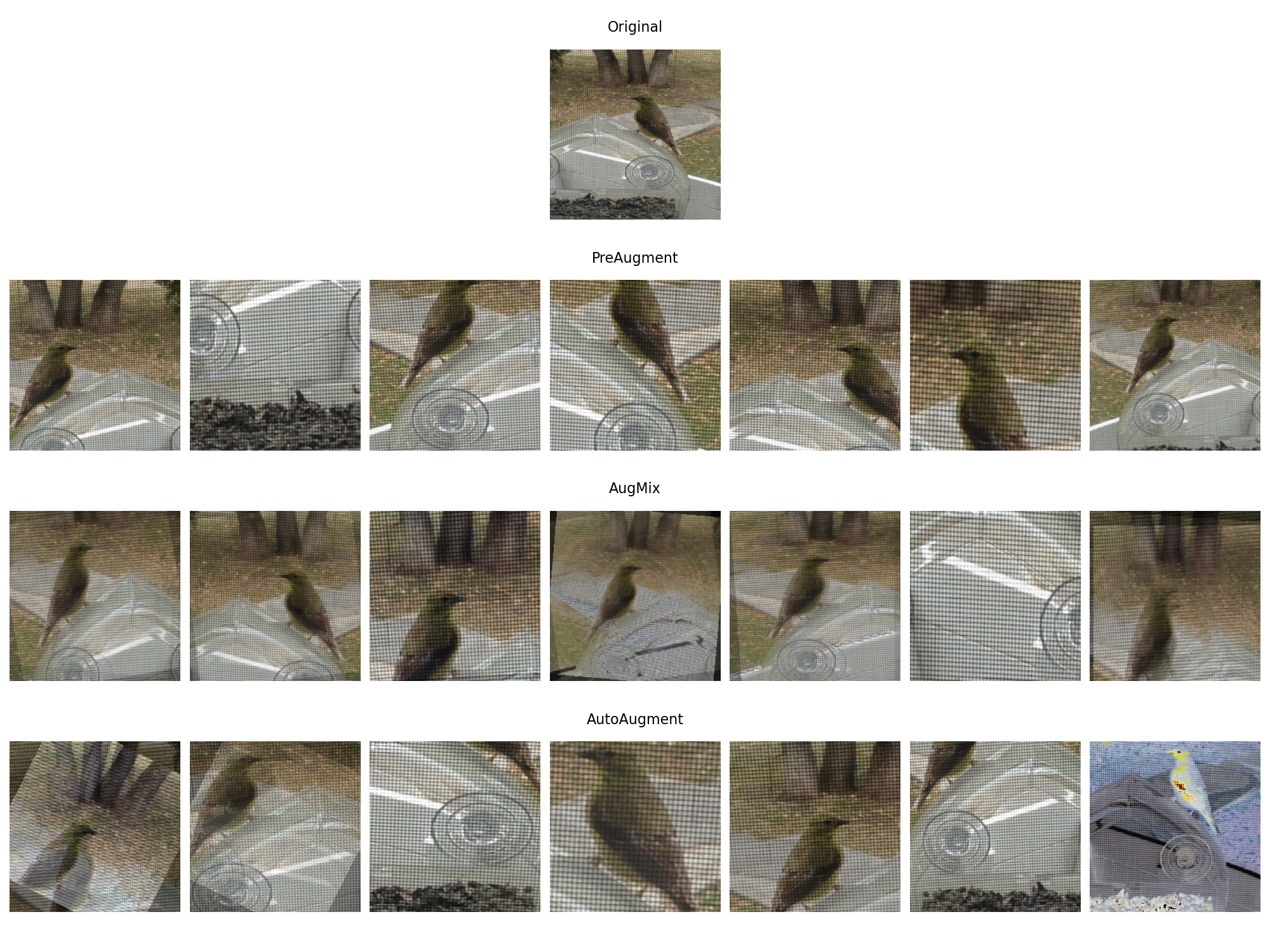

- PreAugment: Baseline method applying straightforward augmentations.

- AugMix: Combines multiple augmentations to improve robustness against distribution shifts.

- AutoAugment: Learns augmentation policies optimized for the dataset.

- DiffusionAugment: Leverages generative diffusion models to synthesize diverse augmentations.
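The notebook is the reference for how augmented views are actually combined. As a minimal sketch of the general idea, the snippet below averages class probabilities over an image and its augmented views. The `toy_classify`, `flip`, and `brighten` functions are hypothetical stand-ins (not CLIP and not the project's augmentations), and plain probability averaging is an assumption rather than the project's confirmed aggregation rule.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def predict_with_tta(image, classify, augmentations):
    """Average class probabilities over the image and its augmented views.

    `classify` maps an image to a 1-D array of logits; `augmentations` is a
    list of image -> image callables. Both are supplied by the caller.
    """
    views = [image] + [aug(image) for aug in augmentations]
    probs = np.stack([softmax(classify(v)) for v in views])  # (n_views, n_classes)
    mean_probs = probs.mean(axis=0)
    return int(mean_probs.argmax()), mean_probs

# --- Hypothetical stand-ins, for illustration only ---
def toy_classify(image):
    """Toy 3-class 'model' whose logits depend on the mean pixel value."""
    m = image.mean()
    return np.array([m, 1.0 - m, 0.5])

def flip(image):
    """Horizontal flip."""
    return image[:, ::-1]

def brighten(image):
    """Add a small brightness offset, clipped to [0, 1]."""
    return np.clip(image + 0.1, 0.0, 1.0)

image = np.full((4, 4), 0.8)
label, mean_probs = predict_with_tta(image, toy_classify, [flip, brighten])
print(label, mean_probs)
```

Averaging probabilities rather than raw logits keeps each view's contribution bounded, which is one common design choice; confidence-weighted or filtered averaging would be alternatives.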

Results:

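On ImageNet-A, stronger augmentation policies improved the model’s ability to generalize to out-of-distribution data. Relative to the PreAugment baseline (27.51%), AugMix gained 1.29 percentage points (28.80%) and AutoAugment gained 2.85 points (30.36%).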

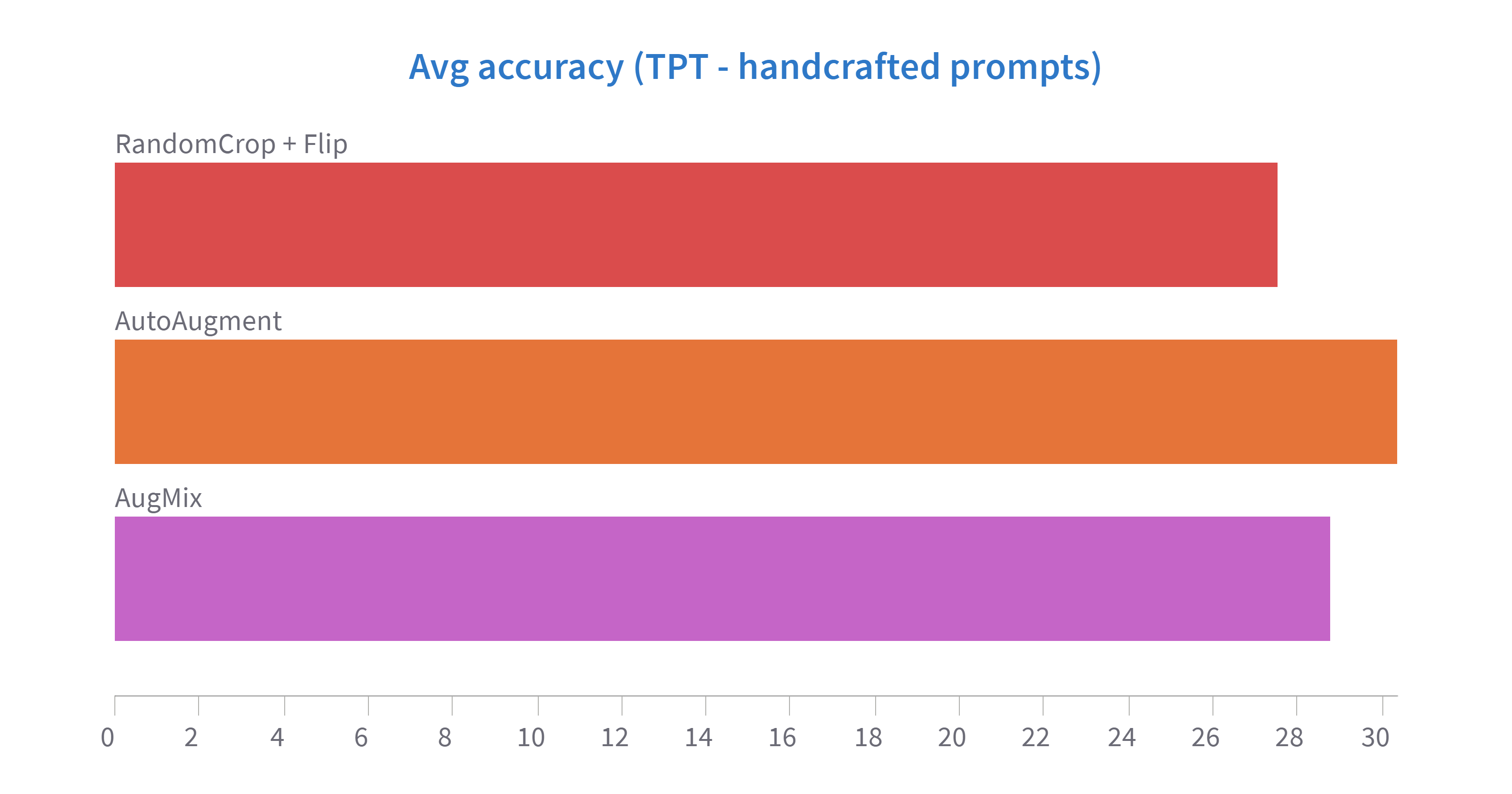

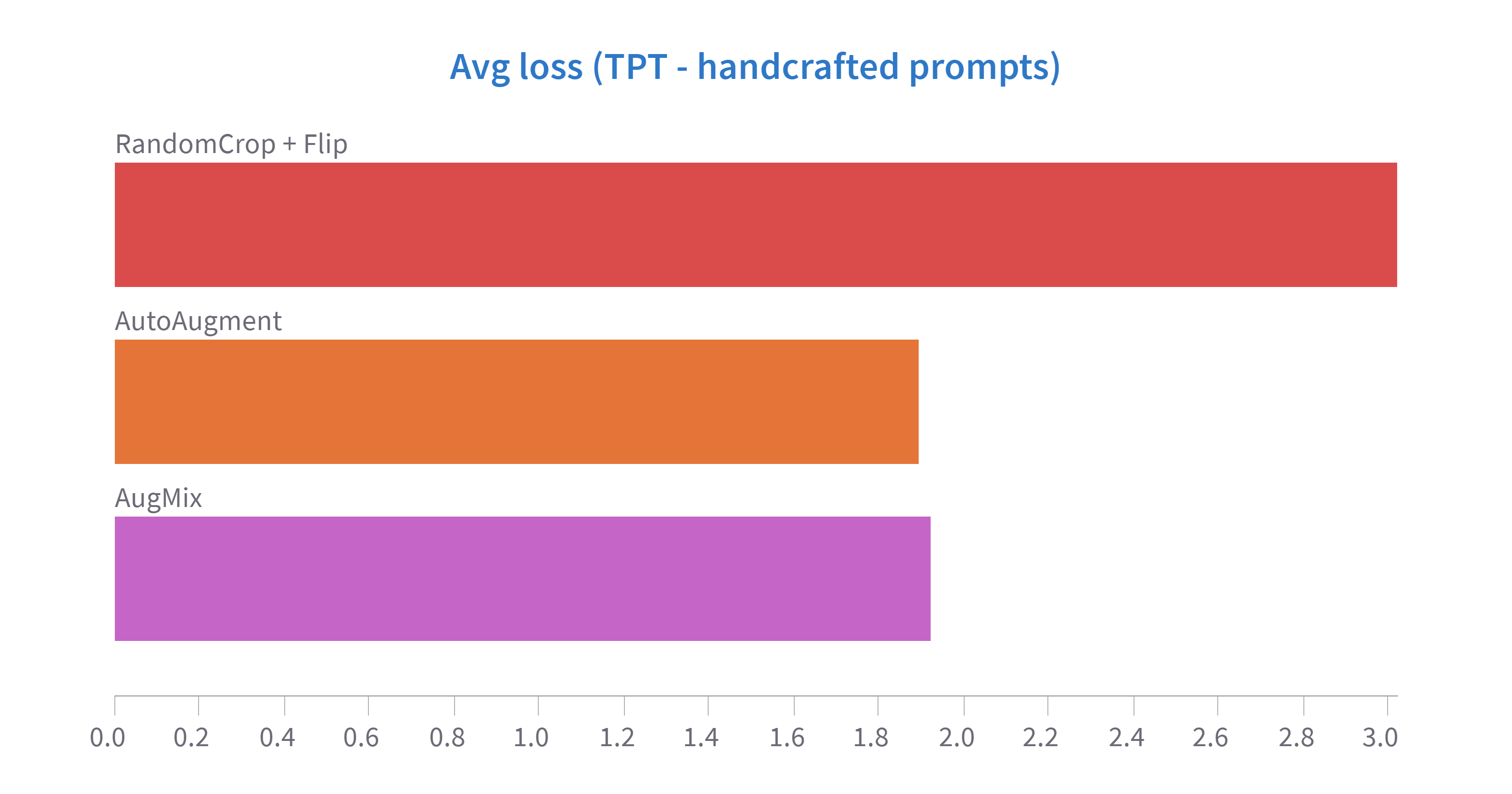

| Augmentation Technique | Avg Accuracy (%) |
|---|---|
| PreAugment | 27.51 |
| AugMix | 28.80 |
| AutoAugment | 30.36 |
| DiffusionAugment | See notebook |

Task 2: Prompt Engineering

Objective:

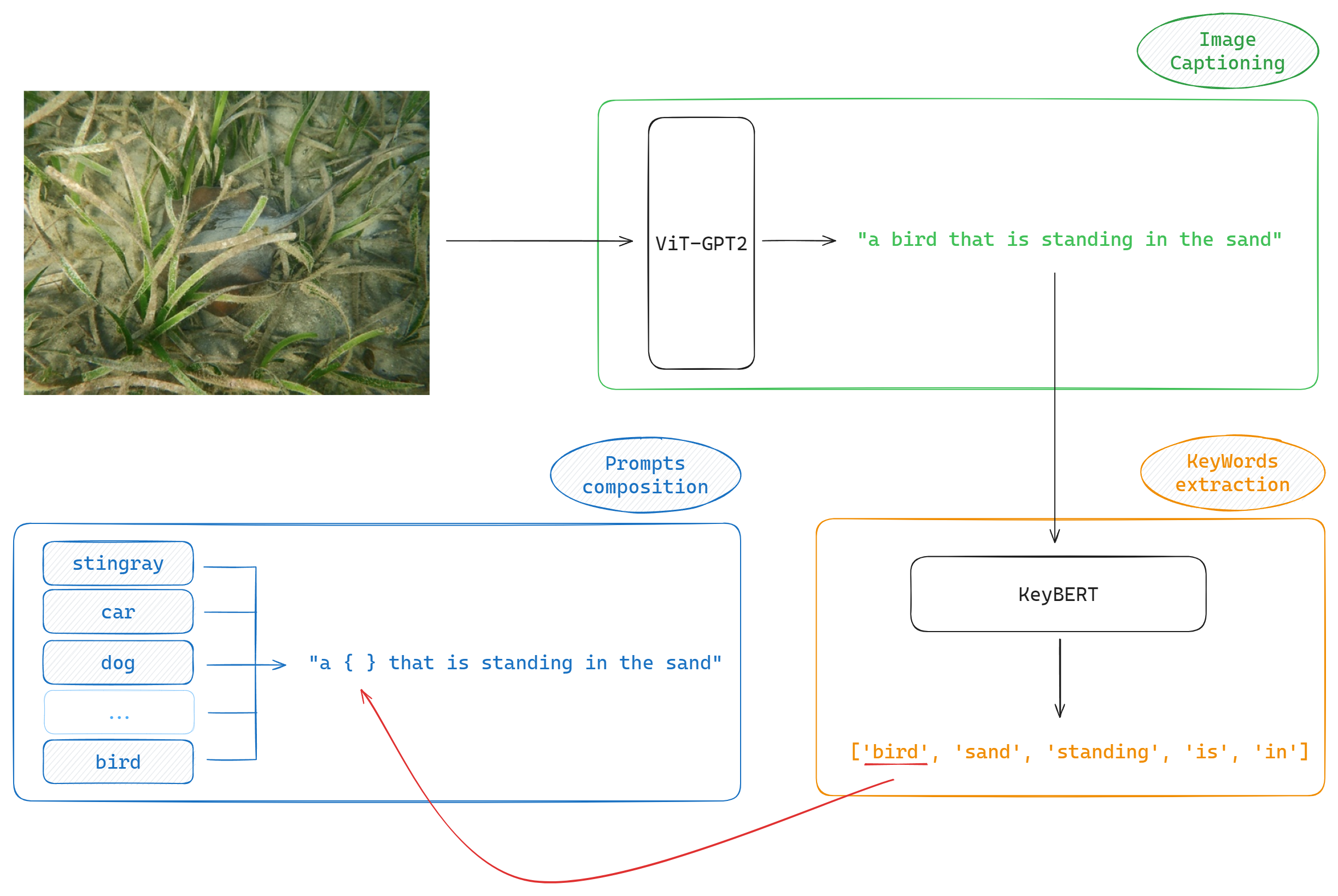

Refine prompt templates for zero-shot classification in CLIP to enhance model alignment with input data.

Approach:

- Baseline Prompts: Standard templates like “a photo of a {label}.”

- Dynamic Prompts: Generated contextually using an image captioning system, creating prompts tailored to the specific content of the image.
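To make the two prompt styles concrete, here is a small sketch of template-based zero-shot scoring. It uses toy 2-D embeddings in place of CLIP's image and text encoders. The dynamic template in `build_dynamic_prompts`, the example caption, and the logit scale of 100 are all assumptions for illustration, not the project's confirmed setup.

```python
import numpy as np

def build_prompts(labels, template="a photo of a {label}."):
    """Fill the baseline template once per class label."""
    return [template.format(label=label) for label in labels]

def build_dynamic_prompts(labels, caption):
    """Hypothetical dynamic template: append an image caption to each prompt."""
    return [f"a photo of a {label}, {caption}." for label in labels]

def zero_shot_probs(image_emb, text_embs, scale=100.0):
    """Softmax over scaled cosine similarities between one image and N prompts."""
    image_emb = image_emb / np.linalg.norm(image_emb)
    text_embs = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = scale * (text_embs @ image_emb)
    logits = logits - logits.max()  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()

labels = ["bee", "snail"]
baseline_prompts = build_prompts(labels)
dynamic_prompts = build_dynamic_prompts(labels, "an insect resting on a flower")

# Toy embeddings standing in for encoder outputs (assumption, not real CLIP features).
text_embs = np.array([[1.0, 0.0], [0.0, 1.0]])
image_emb = np.array([0.9, 0.1])

probs = zero_shot_probs(image_emb, text_embs)
print(baseline_prompts)
print(dynamic_prompts)
print(labels[int(probs.argmax())])
```

In a real pipeline, each prompt list would be passed through the text encoder to produce `text_embs`, so switching from baseline to dynamic prompts changes only the text side of the comparison.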

Results:

Dynamic prompts showed potential for better contextual alignment but underperformed the baseline by 5.74 percentage points in average accuracy (42.13% vs. 47.87%).

| Method | Avg Loss | Avg Accuracy (%) |
|---|---|---|
| Baseline | - | 47.87 |
| Dynamic | 2.5711 | 42.13 |
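The source does not define how the table's metrics are computed. One plausible reading, sketched below under that assumption, is mean cross-entropy for "Avg Loss" and top-1 accuracy in percent for "Avg Accuracy". The probabilities and targets are made-up toy values, not project results.

```python
import numpy as np

def average_loss_and_accuracy(probs, targets):
    """Return (mean cross-entropy, top-1 accuracy in percent).

    probs:   (n_samples, n_classes) predicted class probabilities
    targets: (n_samples,) integer class indices
    """
    probs = np.asarray(probs, dtype=float)
    targets = np.asarray(targets)
    # Probability assigned to the correct class of each sample.
    picked = probs[np.arange(len(targets)), targets]
    avg_loss = float(-np.log(picked + 1e-12).mean())
    accuracy = float((probs.argmax(axis=1) == targets).mean() * 100.0)
    return avg_loss, accuracy

# Toy predictions for four samples and three classes (illustrative only).
toy_probs = [
    [0.70, 0.20, 0.10],
    [0.10, 0.80, 0.10],
    [0.30, 0.40, 0.30],
    [0.25, 0.25, 0.50],
]
toy_targets = [0, 1, 0, 2]

avg_loss, accuracy = average_loss_and_accuracy(toy_probs, toy_targets)
print(f"avg loss: {avg_loss:.4f}, accuracy: {accuracy:.2f}%")
```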

Technical Highlights

- Backbone Model: Leveraged OpenAI’s Contrastive Language–Image Pretraining (CLIP) for its zero-shot classification capabilities.

- Augmentation Design: Introduced novel augmentation pipelines to enhance model robustness.

- Evaluation Framework: Conducted rigorous testing on challenging datasets, with insights documented in the project’s notebook.

Results and Achievements

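- Developed and benchmarked advanced augmentation techniques, with AutoAugment achieving the best reported average accuracy (30.36%).

- Explored prompt engineering for zero-shot classification; dynamic prompts show potential for contextual alignment but do not yet match the baseline template in accuracy.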

- Highlighted areas for future work, including better dynamic prompt generation and augmentation fine-tuning.

Resources

- Code Repository: GitHub Repository

- Additional Details: Project Page